GitHub Copilot has taken the software engineering world by storm, reaching $100M in annual recurring revenue (ARR). That figure alone is often cited as roughly enough revenue for a company to go public. Meanwhile, funding continues to flow into code-focused LLM use cases.

LLMs are causing a stir in the software engineering community, with some developers praising the technology and others fearing it. The controversy surrounding LLMs is so intense that it has even led to a lawsuit against GitHub Copilot.

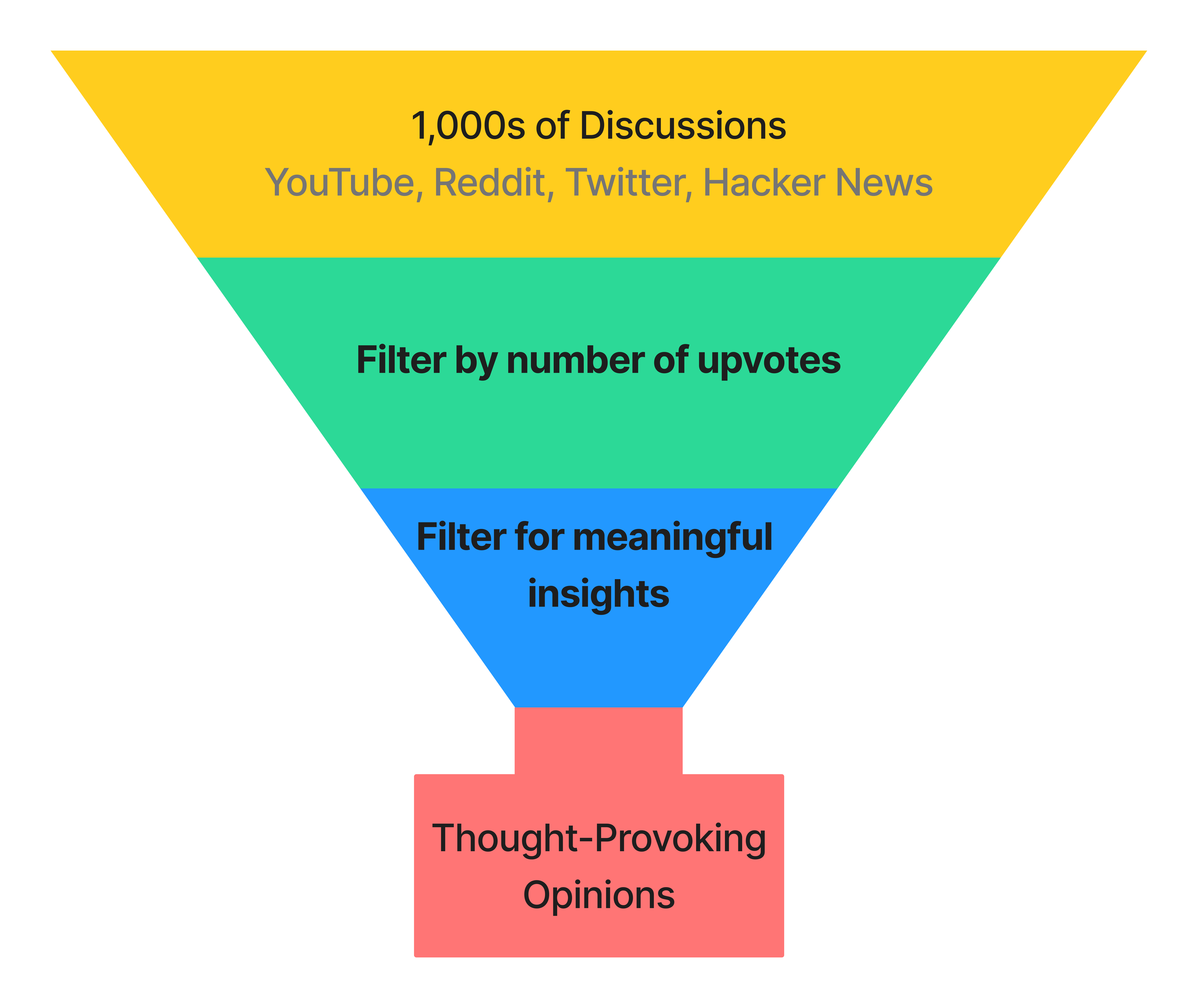

To understand how developers are receiving Copilot, I went to where developers live: Reddit, Twitter, Hacker News, and YouTube. I parsed thousands of discussions and synthesized my findings in this article, striving to present only thought-provoking opinions.

We are aware that GitHub Copilot was trained on questionable data (see GitHub Copilot and open source laundering) and that there is ongoing controversy surrounding the technology. However, this article is not about the ethics of LLMs or their training data. That is a whole other discussion, and quite frankly, I am not qualified to comment on it. Instead, this article focuses on product feedback from developers.

We have no affiliation with GitHub Copilot, OpenAI, or Microsoft. We are not paid to write this article, and we do not receive any compensation from the companies.

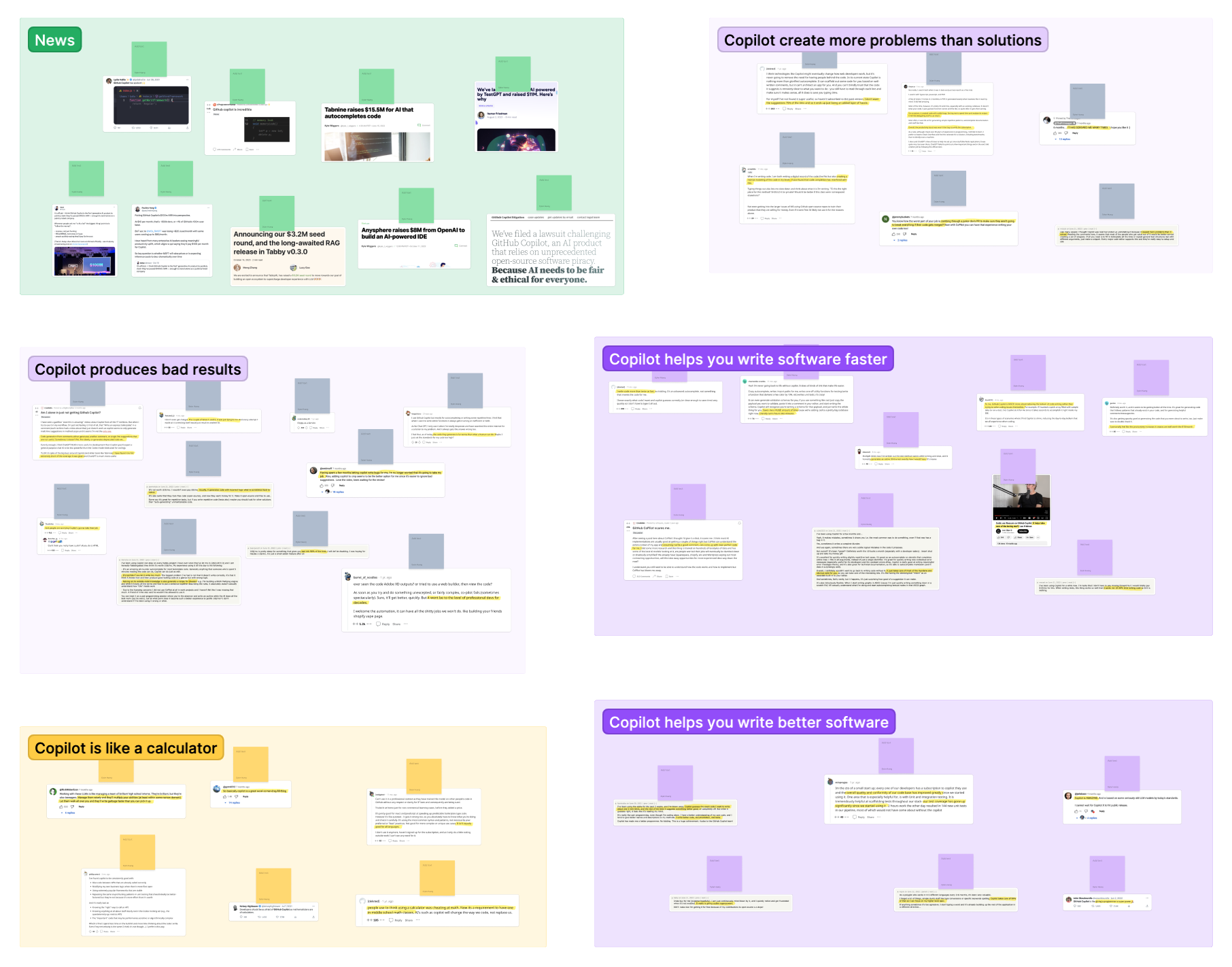

Next, I transcribed these discussions onto a whiteboard, organizing them into "Anti-Copilot" (👎), "Pro-Copilot" (👍), or "Neutral" (🧐) categories, and then clustering them into distinct opinions. Each section in this post showcases an opinion while referencing pertinent discussions.

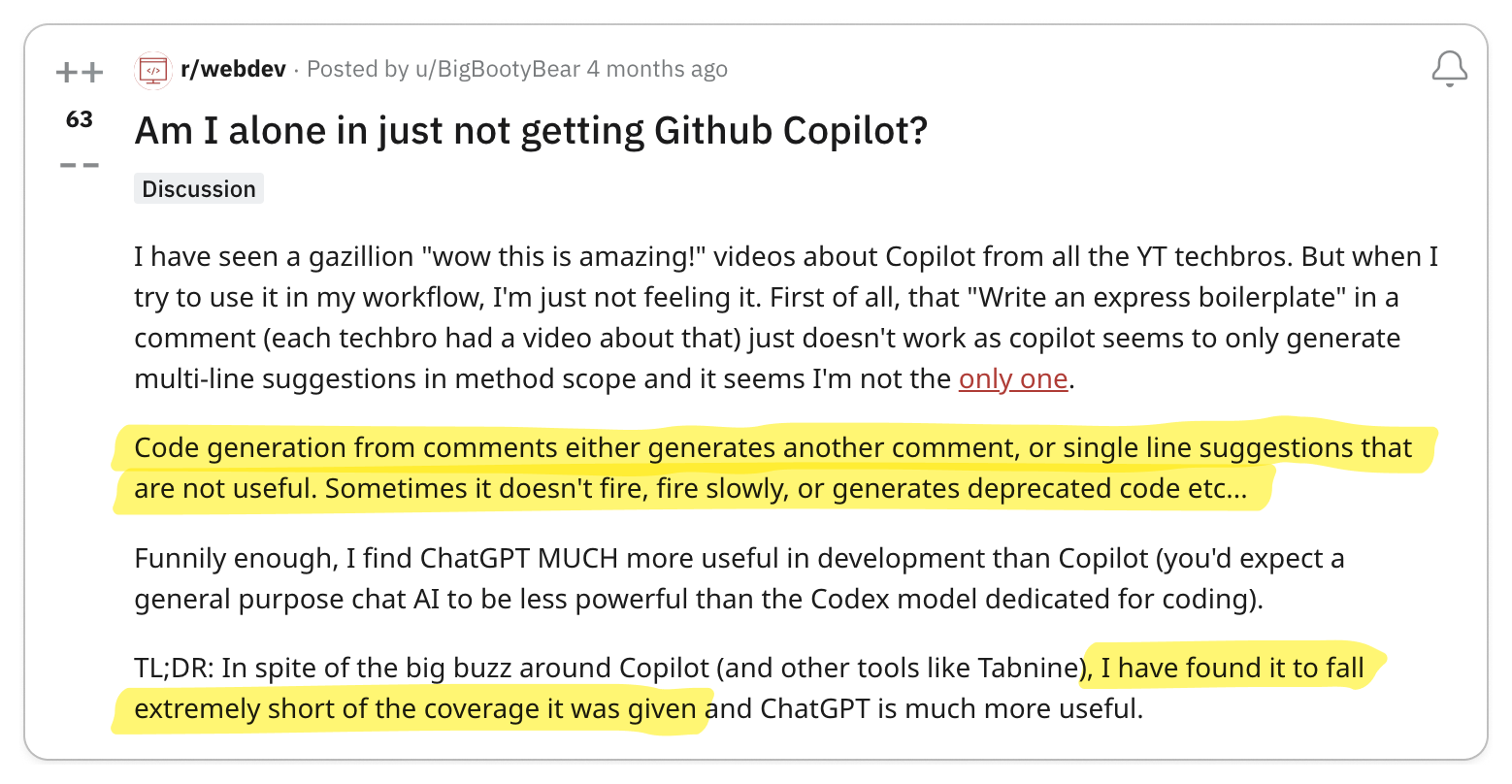

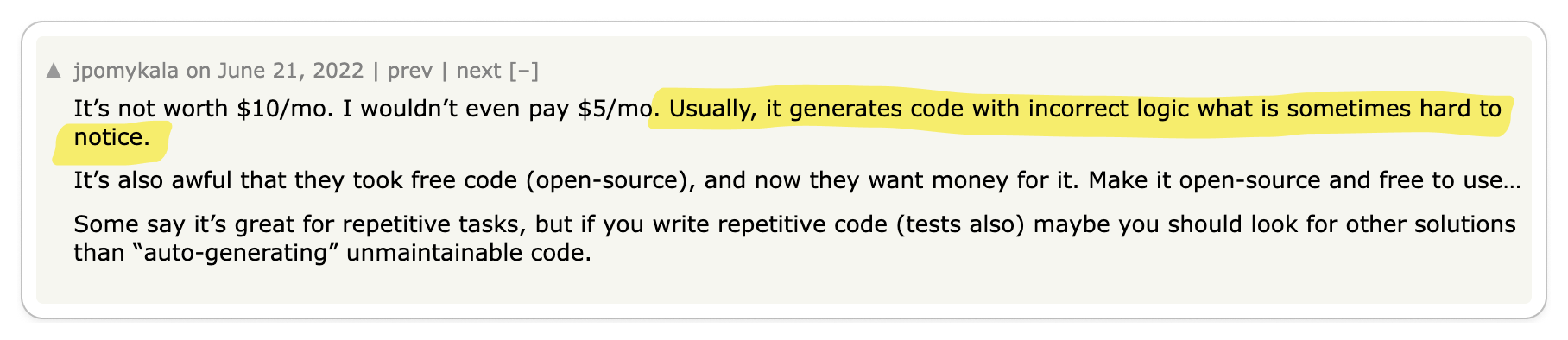

👎 Copilot produces bad results

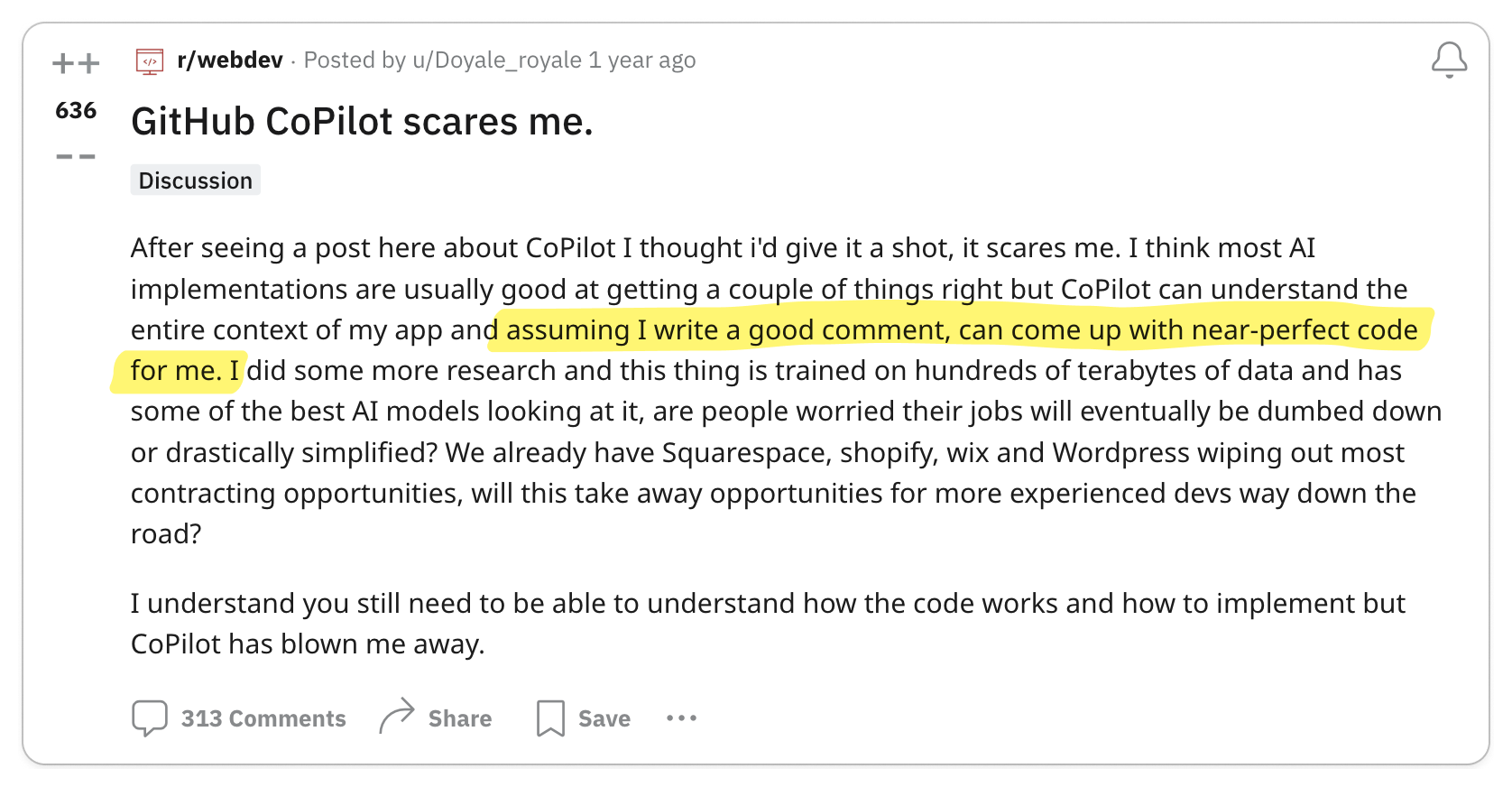

LLMs are probabilistic models, which means they aren't always correct. This is especially true for Copilot, which is trained on a corpus of code that may not be representative of the code a given developer writes. As a result, Copilot can produce bad results, and for some developers it does so consistently.

Key Takeaway 🔑

Developers expect reliability from their tools.

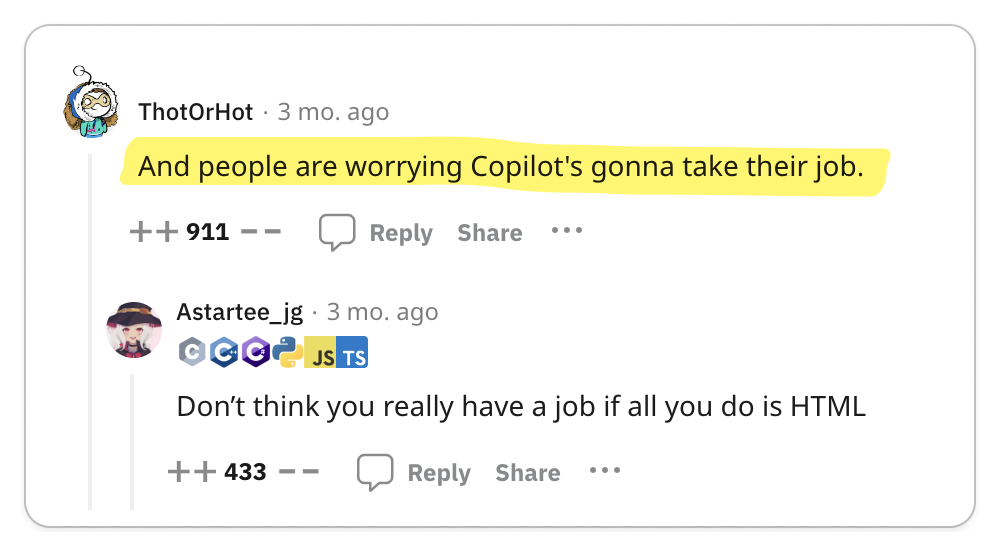

Because Copilot is not reliable, some developers have a tough time wrestling with its output. According to these developers, Copilot lies or produces bad results the vast majority of the time. This can be exceedingly frustrating for developers who expect Copilot to deliver on its promise of writing code for them. After a few bad experiences, some developers have stopped using Copilot altogether.

If you worry about job security, rest assured: Copilot is not going to replace you anytime soon.

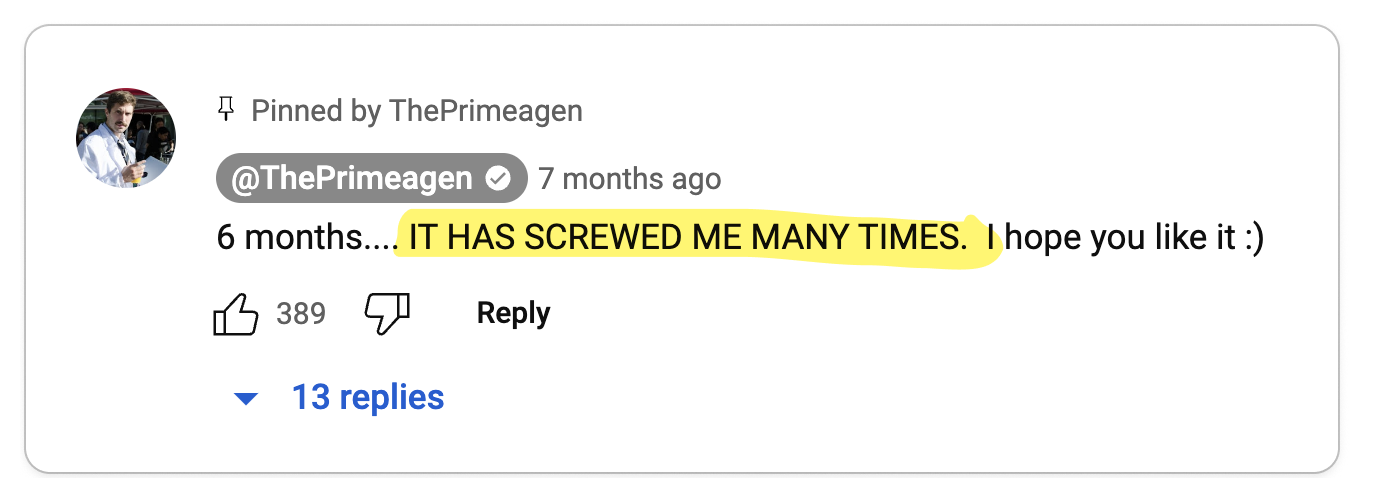

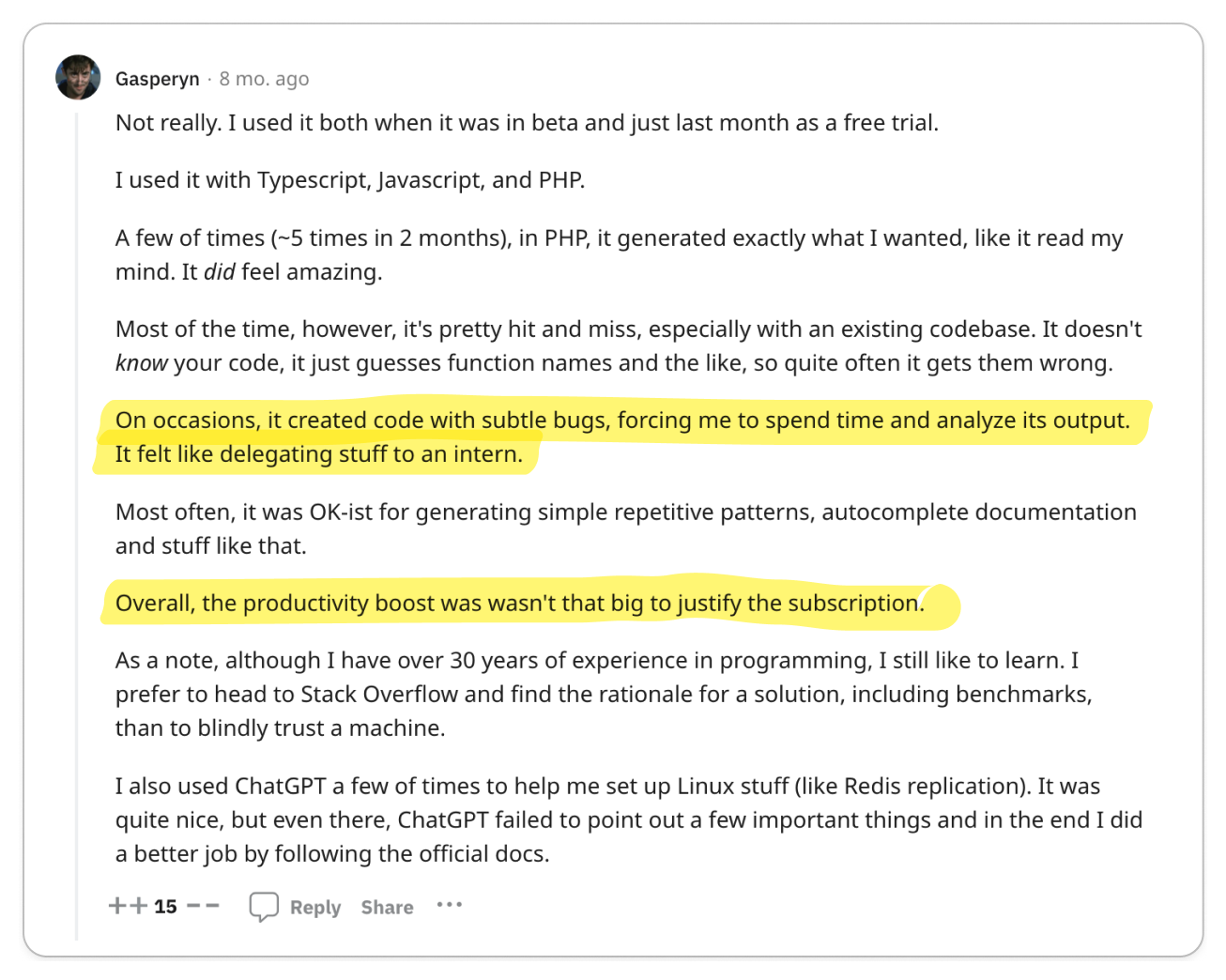

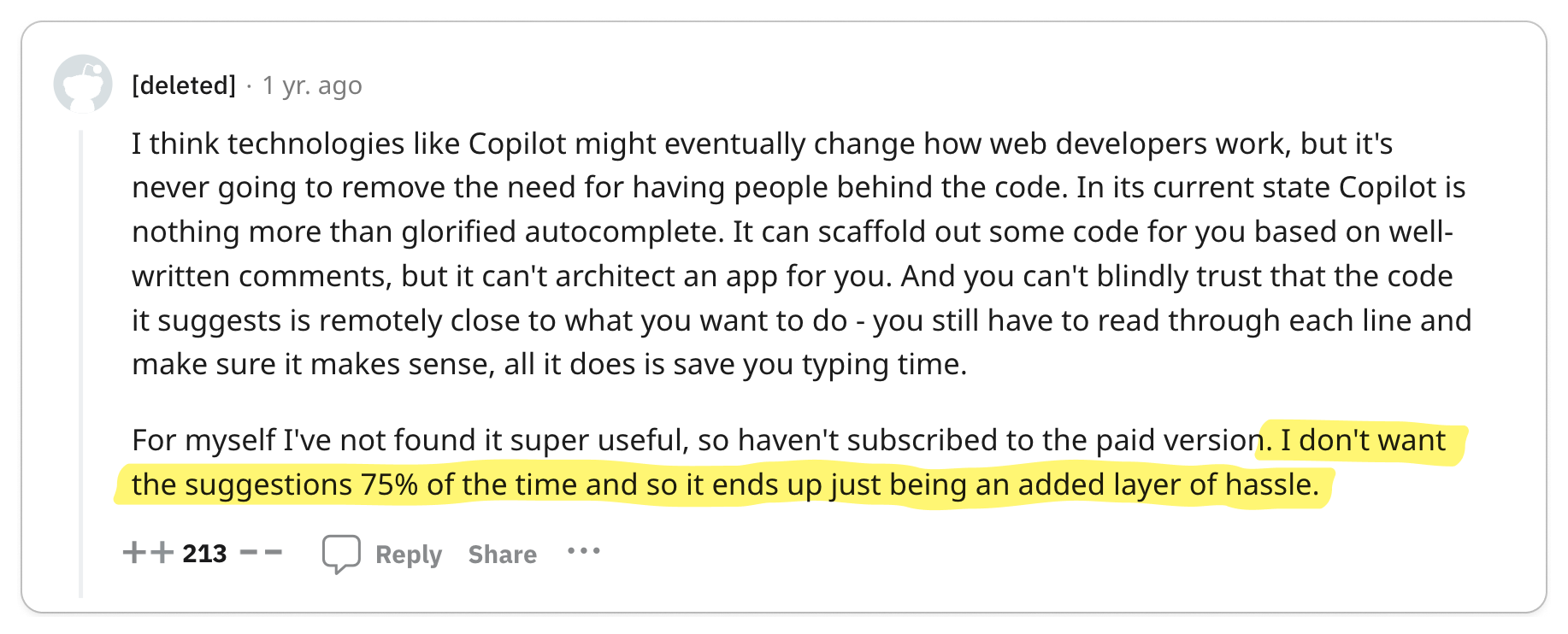

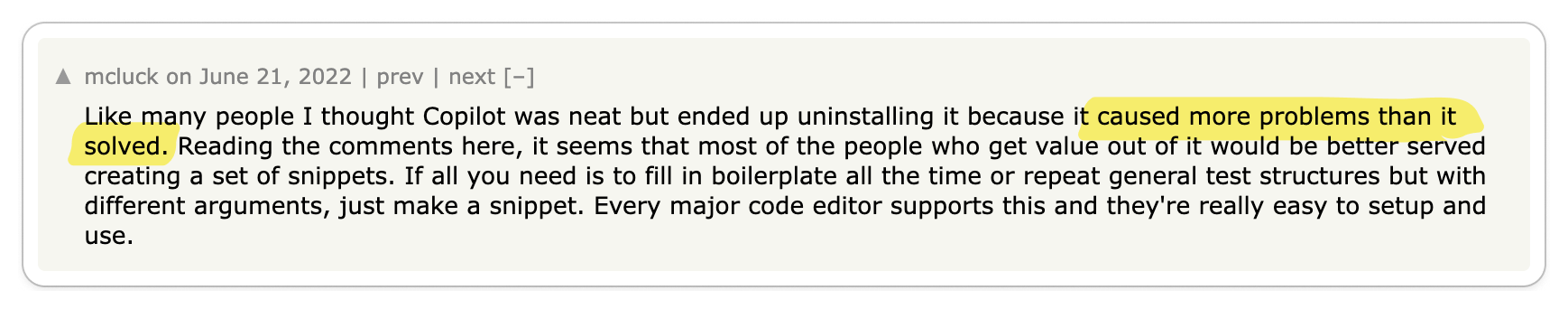

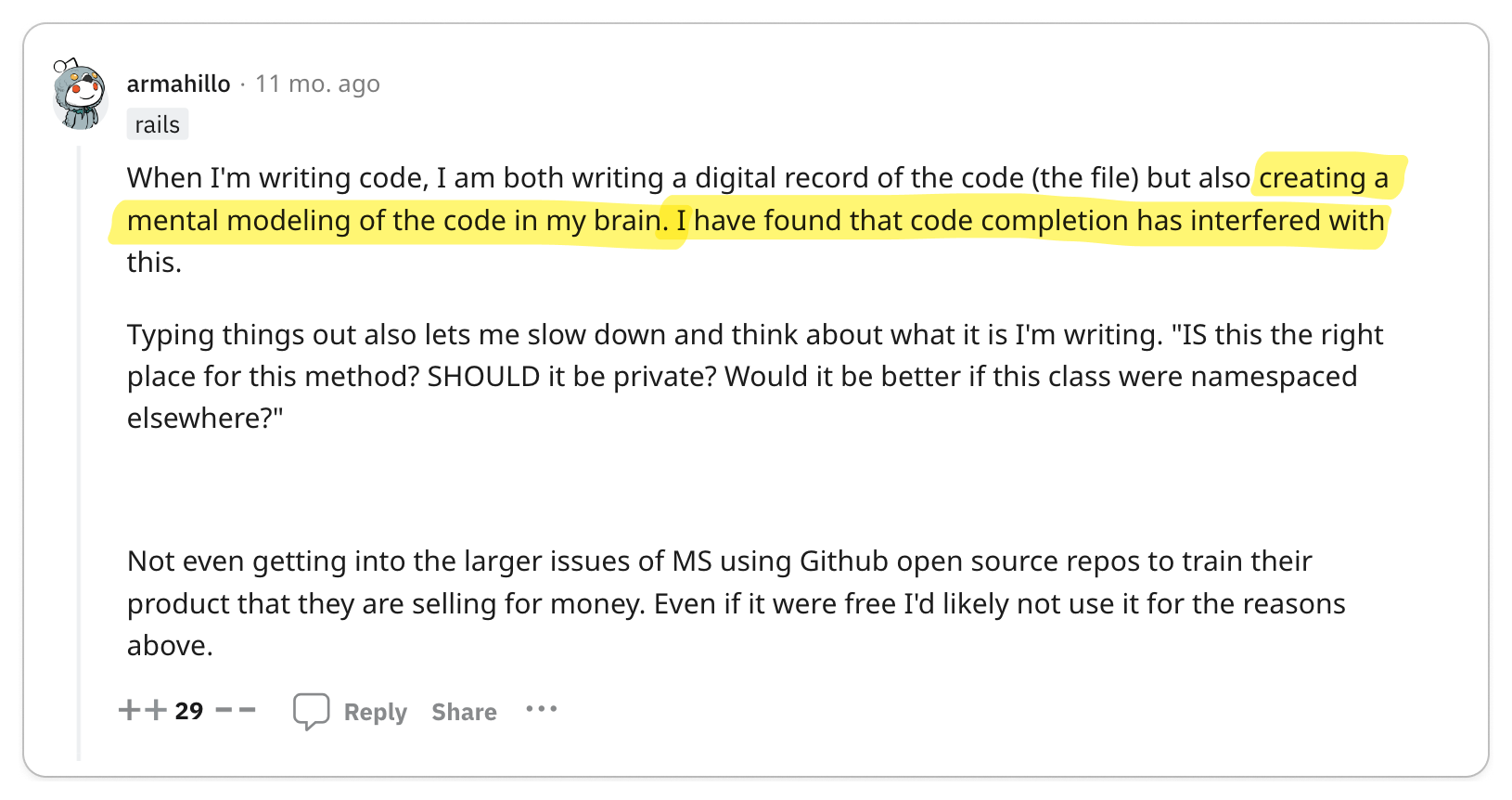

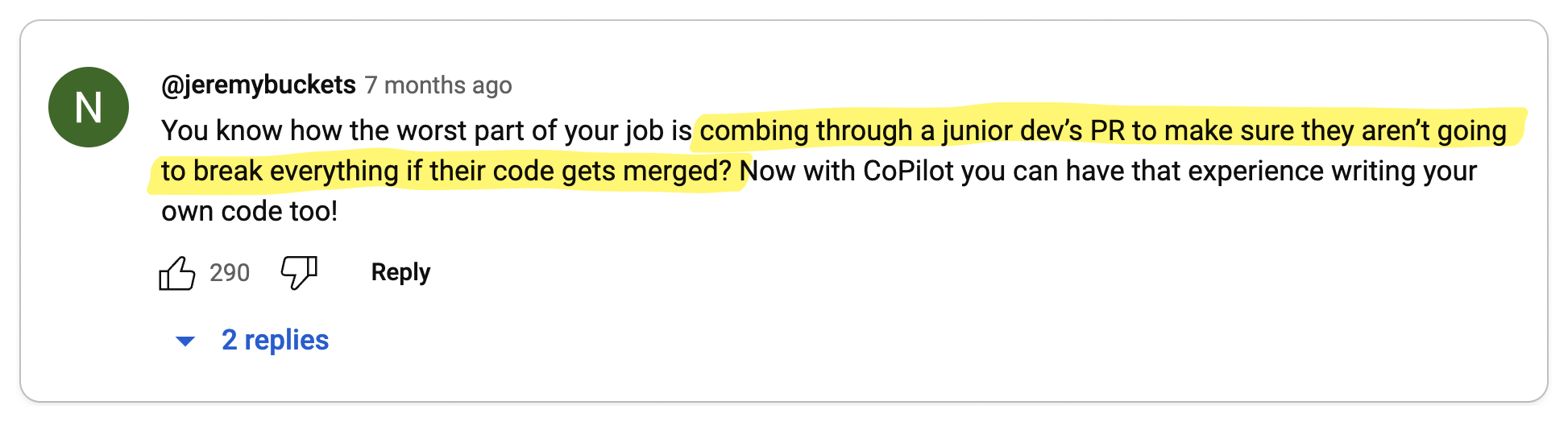

👎 Copilot creates more problems than solutions

Copilot is a tool that is supposed to help developers write code. However, its unreliable results create more problems than they solve.

Key Takeaway 🔑

Copilot can waste your time.

Code requires 100% accuracy, and an inaccurate suggestion can lead you down a rabbit hole of debugging, often wasting your time or flat out breaking your code. In some cases, this is frustrating enough for developers to stop using Copilot altogether. Like a junior developer, Copilot requires a lot of oversight, and the subtle bugs it introduces can take longer to find and fix than writing the code yourself would have. For developers who find the output too inaccurate, Copilot becomes an interference and ultimately doesn't save them any time.

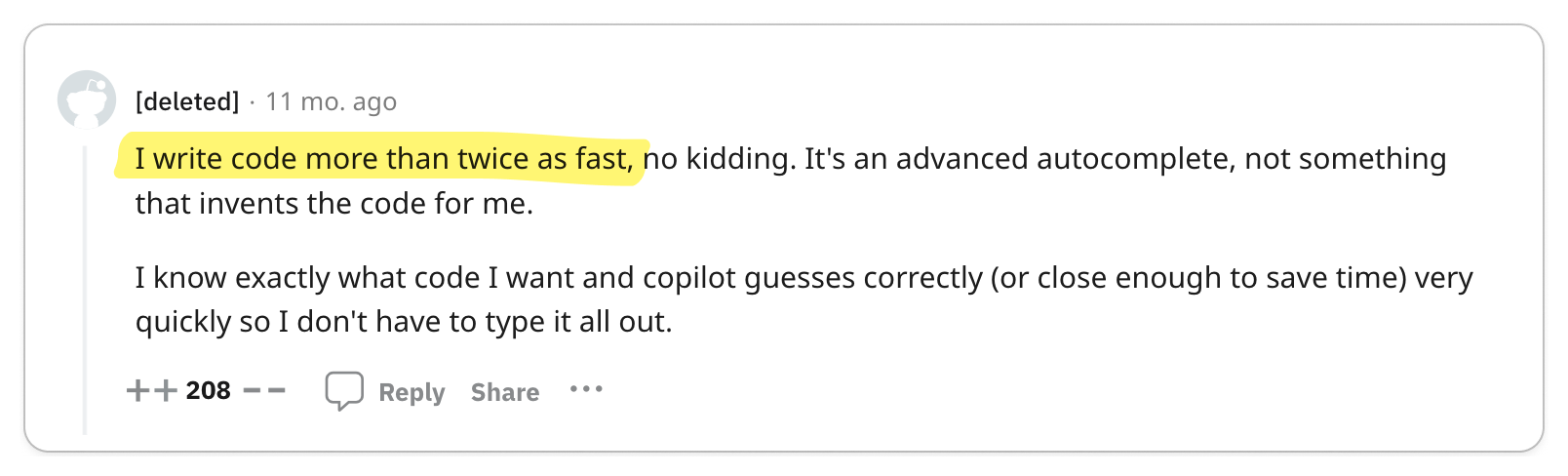

👍 Copilot helps you write software faster

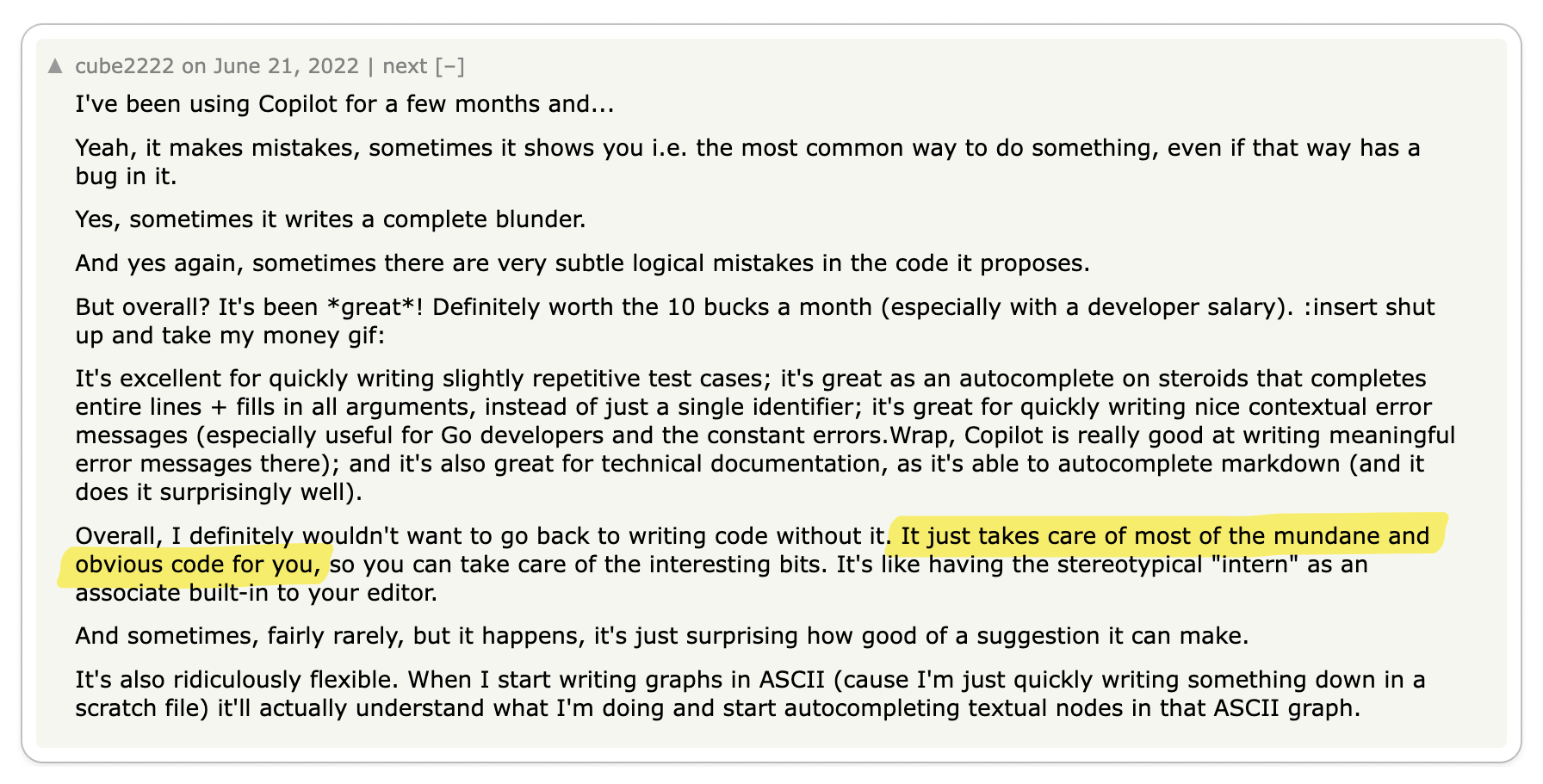

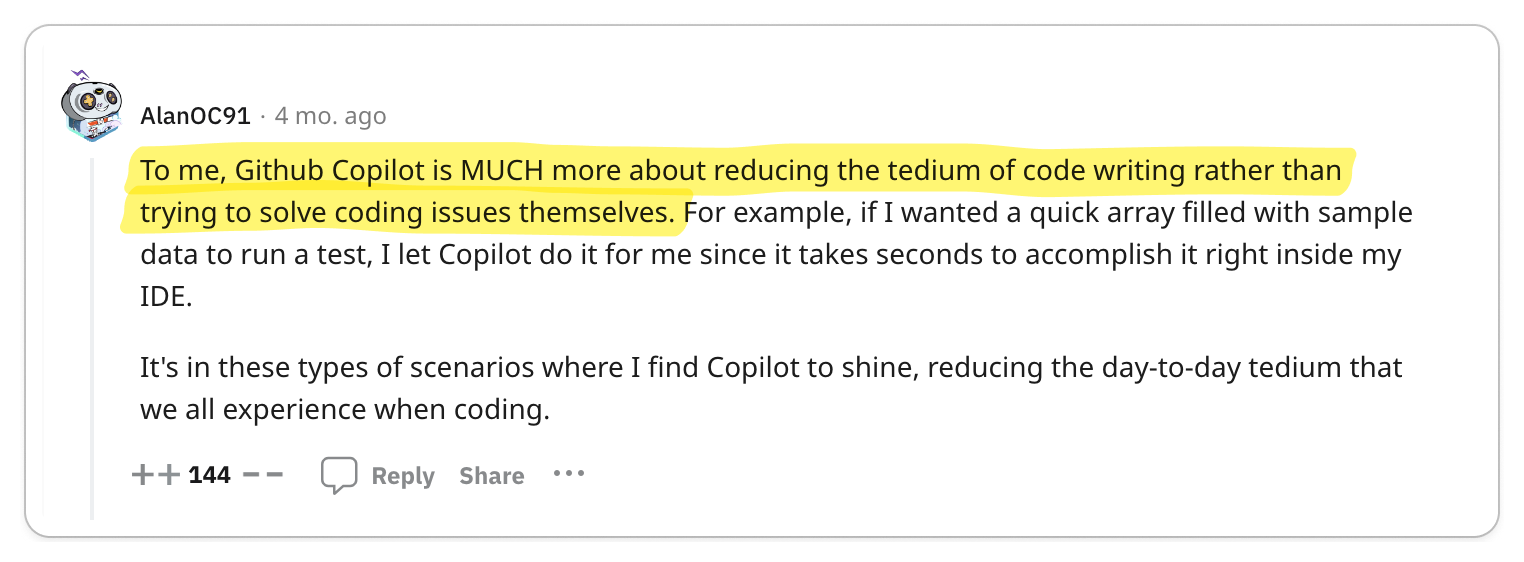

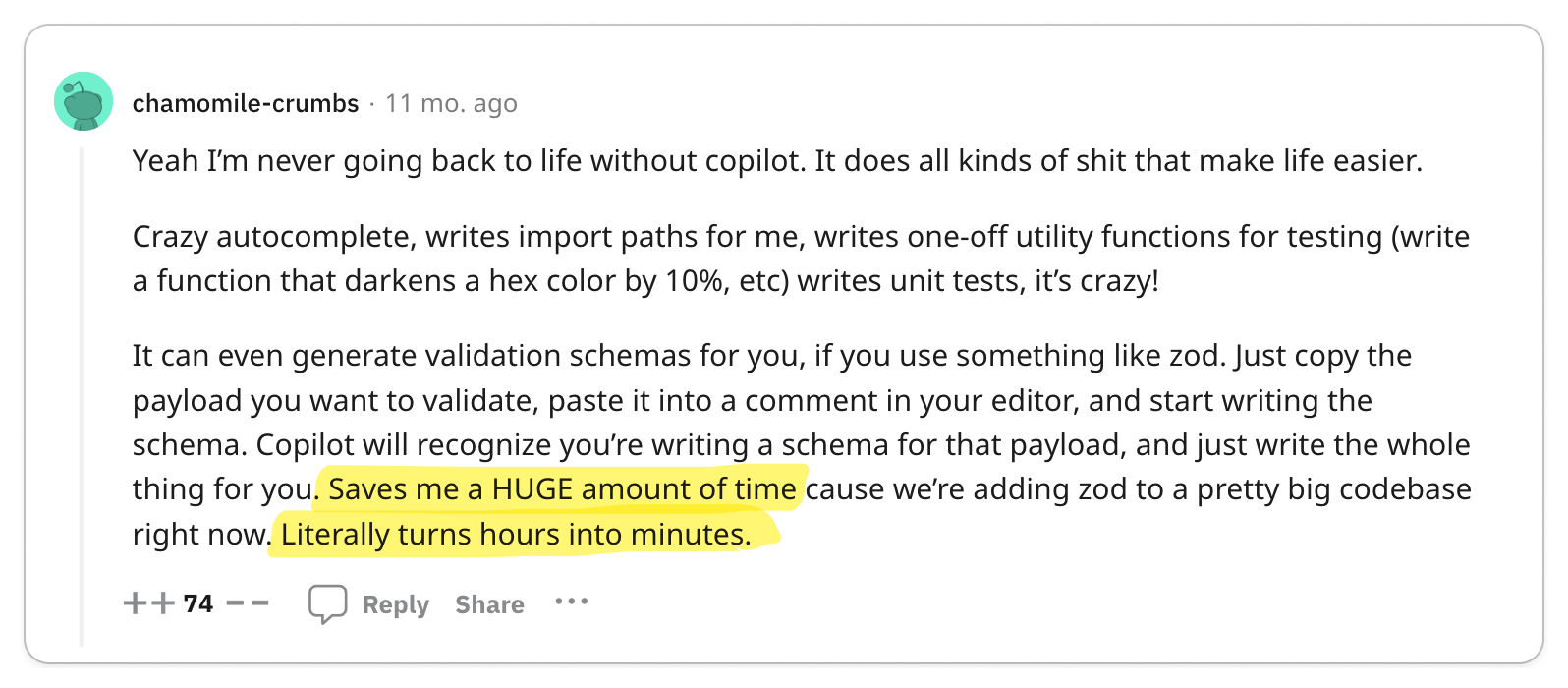

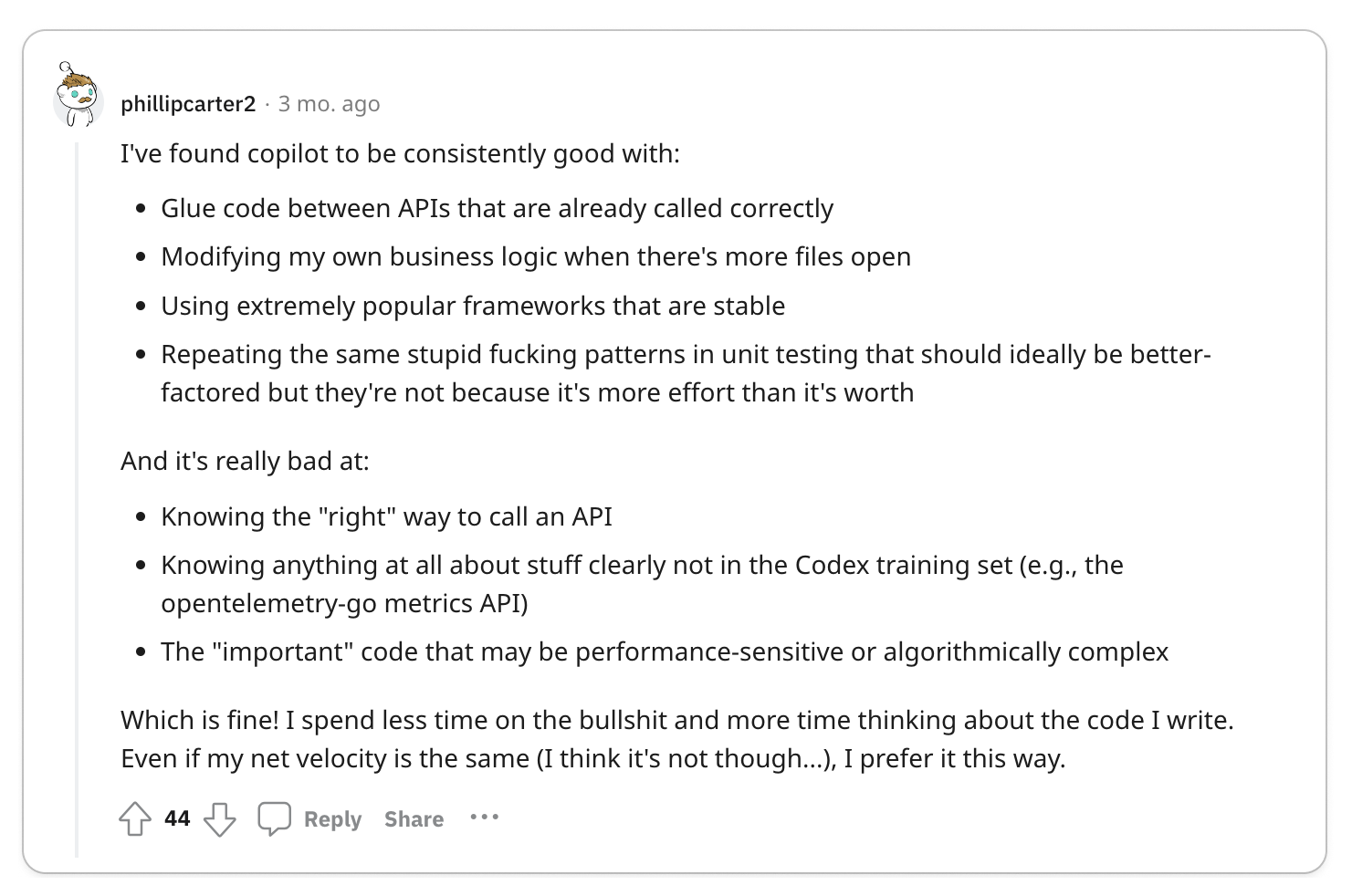

Despite the inaccuracy of LLMs, Copilot can be powerful if you treat it as a way to take care of the boring stuff.

Key Takeaway 🔑

Copilot increases productivity.

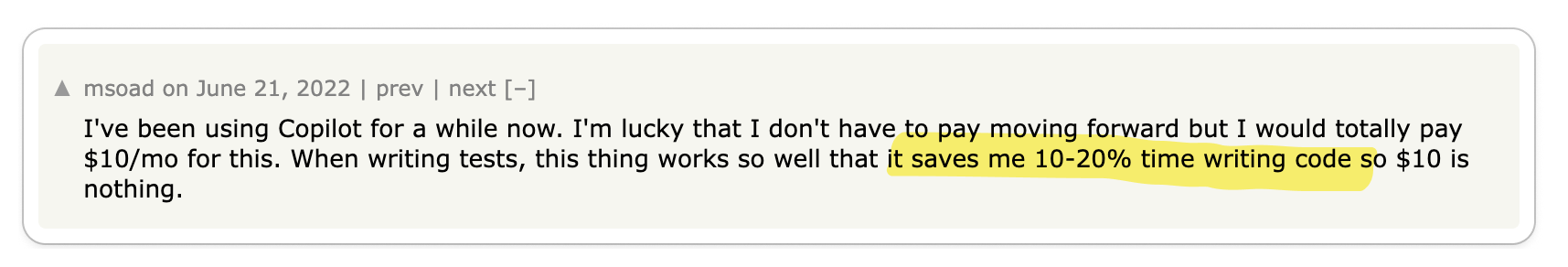

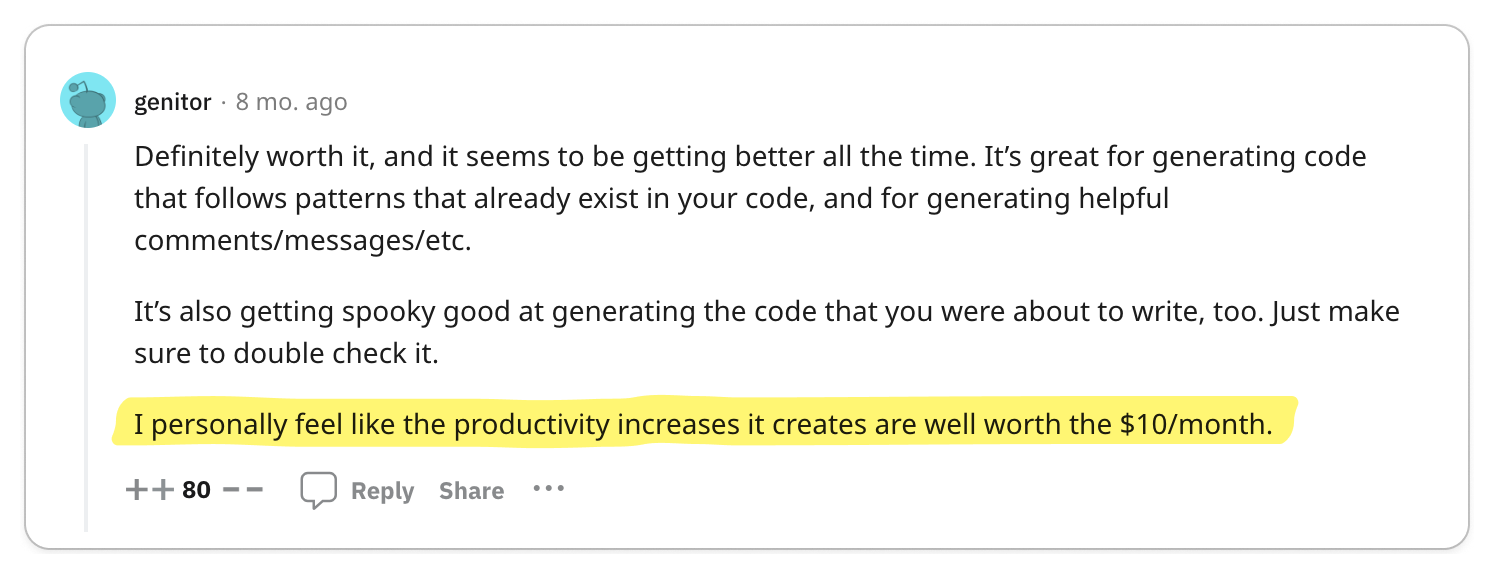

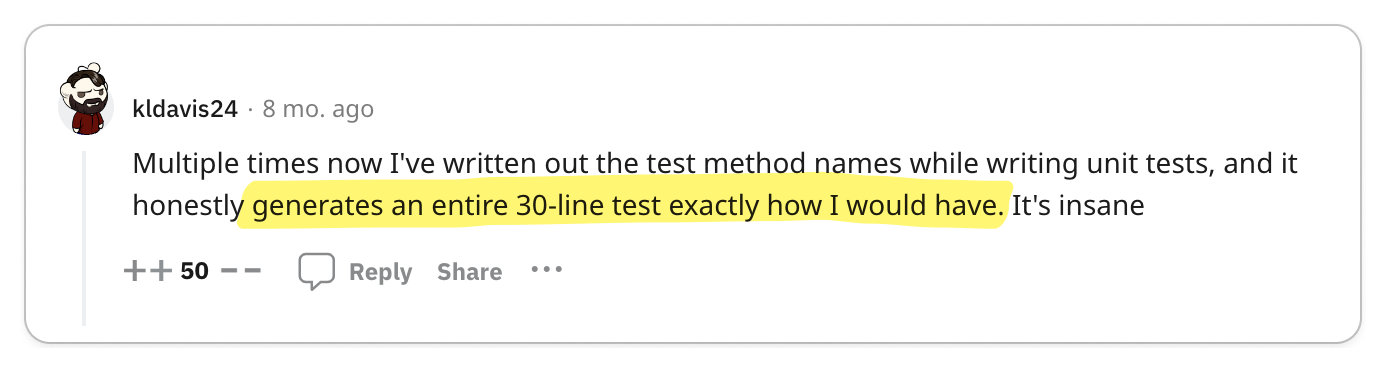

Often, developers face mundane and repetitive tasks. Given enough context, Copilot can do these tasks for you with sufficient accuracy. For some developers, these tasks can be a significant time sink, and Copilot can help you get that time back.

The 10-20% productivity increase mentioned above is substantial. To keep the analysis conservative, let's go below even the lower bound: assume an engineer is paid $100k/yr and becomes just 5% more productive (half of the 10% figure). That extra 5% is worth $5,000 a year, and after subtracting Copilot's yearly cost of $100, the tool brings in a net added value of $4,900 for the company.
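The back-of-the-envelope estimate can be written out as a small calculation. This is a minimal Python sketch that assumes, as the estimate does, that a productivity gain translates directly into salary-equivalent value; the function name `net_annual_value` is hypothetical, and the inputs are the illustrative figures from the paragraph above.

```python
def net_annual_value(salary: float, productivity_gain: float, tool_cost: float) -> float:
    """Estimate a tool's yearly net value for one engineer.

    Assumption: added output is worth salary * productivity_gain,
    and the tool's yearly subscription cost is subtracted from it.
    """
    added_value = salary * productivity_gain
    return added_value - tool_cost


# Conservative scenario: $100k salary, 5% gain, $100/yr for Copilot.
conservative = net_annual_value(salary=100_000, productivity_gain=0.05, tool_cost=100)
print(f"Net value: ${conservative:,.0f}")  # prints "Net value: $4,900"

# The 10-20% range reported in the discussions, for comparison.
for gain in (0.10, 0.20):
    print(f"{gain:.0%} gain -> ${net_annual_value(100_000, gain, 100):,.0f}")
```

Because the subscription cost is fixed while the added value scales with salary, the net value stays positive for any realistic salary once the gain exceeds a fraction of a percent.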

👍 Copilot helps you write better software

Copilot can be a great learning tool for junior developers, giving tech leads more time to focus on higher-level tasks, which ultimately leads to better software and happier developers.

Key Takeaway 🔑

Copilot has enough intelligence to help you write better software.

This holds especially true for junior developers still learning the ropes. Copilot can make mundane tasks like documentation and testing drastically easier, giving developers more time to focus on the bigger picture while maintaining a high standard of code quality. Multiplied across an engineering team, this effect leads to a higher-quality codebase, the ultimate dream for engineering leaders.

🧐 Copilot is like a calculator

Copilot is a tool that can help you solve problems faster, but it is not a replacement for your brain. You still need to know how to solve problems, and you still need to know how to write code.

Key Takeaway 🔑

Just as calculators spare mathematicians from tedious arithmetic, Copilot spares developers from repetitive tasks.

However, just like a calculator, Copilot does not help you make sense of the problem. Understanding what needs to be solved, and how, is still up to you.

Conclusion

Opinions on Copilot vary; some see it as a blessing, while others regard it as a curse. For its proponents, Copilot is a valuable tool that enhances coding speed and quality. However, critics argue it introduces more issues than it resolves.

I suspect that the complexity of a task makes a big difference in the quality of the output: tasks that require more context tend to produce worse results. Yet, when viewed simply as a tool to handle the mundane aspects of coding, Copilot reveals its potential.

At Konfig, we use Copilot and ChatGPT daily and have found them incredibly useful. We are excited to see what the future holds for LLMs and look forward to using more tools that help developers write better software. We would be very disappointed if we could no longer use Copilot.